
23. Subspace Clustering

High dimensional data sets are now pervasive in signal processing applications. For example, high resolution surveillance cameras are now commonplace and continually generate millions of images. A major factor in the success of current signal processing algorithms is that, even though these data sets are high dimensional, their intrinsic dimension is often much smaller than the dimension of the ambient space.

One resorts to inferring (or learning) a quantitative model of a given set of data points.

In the absence of training data, the problem of modeling falls into the category of unsupervised learning. There are two common viewpoints of data modeling. A statistical viewpoint assumes that data points are random samples from a probability distribution; statistical models try to learn this distribution from the data set. In contrast, a geometrical viewpoint assumes that data points belong to a geometrical object (a smooth manifold or a topological space); a geometrical model attempts to learn the shape of the object to which the data points belong. Examples of statistical modeling include maximum likelihood and maximum a posteriori estimation, Bayesian models, etc. An example of a geometrical model is Principal Component Analysis (PCA), which assumes that the data are drawn from a low dimensional subspace of the high dimensional ambient space (see footnote 1). PCA is simple to implement and has found tremendous success in fields such as pattern recognition, data compression, image processing and computer vision.
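To make the geometric view concrete, here is a minimal sketch (our own, not taken from the text) of fitting a $d$-dimensional linear subspace to data with the SVD, in the spirit of PCA. It assumes NumPy, stores one data point per column, and skips mean-centering, so it fits a subspace through the origin; subtracting the mean column first would give the usual affine PCA fit. The names `pca_subspace`, `Y` and `d` are illustrative.

```python
import numpy as np


def pca_subspace(Y, d):
    """Fit a d-dimensional linear subspace to the columns of Y (shape M x S).

    Returns an orthonormal basis (M x d) made of the leading left singular
    vectors of Y, and the distance of every column of Y from that subspace.
    No mean-centering is done, so the fitted subspace passes through the origin.
    """
    U, _, _ = np.linalg.svd(Y, full_matrices=False)
    basis = U[:, :d]
    residual = Y - basis @ (basis.T @ Y)   # component orthogonal to the subspace
    return basis, np.linalg.norm(residual, axis=0)


# Synthetic example: 200 points lying exactly on a 2-D subspace of R^10.
rng = np.random.default_rng(0)
true_basis, _ = np.linalg.qr(rng.standard_normal((10, 2)))
Y = true_basis @ rng.standard_normal((2, 200))

basis, dist = pca_subspace(Y, d=2)
print(dist.max())   # numerically zero: every point lies in the fitted subspace
```

Using the SVD rather than an eigendecomposition of $YY^T$ avoids squaring the condition number of the data matrix.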

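The assumption that all the data points in a data set could be drawn from a single model is, however, a strong one. In practice, it often occurs that if we group or segment the data set into multiple disjoint subsets, each subset can be modeled well by a simpler primitive model, even though no single model fits the whole data set.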

Consider the problem of vanishing point detection in computer vision. Under perspective projection, the images of a group of parallel lines pass through a common point in the image plane, known as the vanishing point of the group. For a typical scene consisting of multiple sets of parallel lines, the problem of detecting all vanishing points in the image plane from the set of edge segments identified in the image can be transformed into clustering points (obtained from the edge segments) into multiple 2D subspaces in $\mathbb{R}^3$.
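The reduction can be checked numerically under one common representation, which we assume here since the text does not spell it out: an image line is described by homogeneous coordinates $\ell \in \mathbb{R}^3$, so that a point $(x, y)$ lies on it when $\ell^T (x, y, 1)^T = 0$, and the line through two image points is the cross product of their homogeneous coordinates. If all lines of a group pass through the vanishing point $v$, then $\ell^T (v, 1)^T = 0$ for each of them, so their coordinate vectors lie in the 2D subspace of $\mathbb{R}^3$ orthogonal to $(v, 1)$. The vanishing point and edge segments below are made up for illustration.

```python
import numpy as np


def line_through(p, q):
    """Homogeneous coordinates of the image line through 2-D points p and q."""
    return np.cross(np.append(p, 1.0), np.append(q, 1.0))


rng = np.random.default_rng(1)
v = np.array([3.0, -2.0])             # hypothetical vanishing point (image coordinates)

# Edge segments from one group of parallel scene lines: each segment lies on a
# line through v, so we pick a random start point p and a point q between p and v.
lines = []
for _ in range(20):
    p = rng.uniform(-10.0, 10.0, size=2)
    q = p + rng.uniform(0.2, 0.8) * (v - p)
    lines.append(line_through(p, q))
L = np.array(lines).T                 # 3 x 20 matrix, one line per column

s = np.linalg.svd(L, compute_uv=False)
print(s)   # the third singular value is numerically zero: the columns span a plane
```

With several groups of parallel lines, the columns would instead fall into several such planes, one per vanishing point.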

In the motion segmentation problem, an image sequence consisting of multiple moving objects is segmented so that each segment consists of the motion of only one object. This is a fundamental problem in applications such as motion capture, vision-based navigation, target tracking and surveillance. We first track the trajectories of feature points (from all objects) over the image sequence. It has been shown (see the section on motion segmentation) that the trajectories of feature points of a single rigidly moving object lie in a low dimensional subspace. Thus the motion segmentation problem can be solved by segmenting the feature point trajectories into groups, one per object, and estimating the motion of each object from its trajectories.
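A small numerical illustration, assuming an affine camera model (our assumption; the details are deferred to the motion segmentation section): if the feature points of one rigid object have 3-D coordinates $X_p$ and frame $f$ applies an affine map $x_{fp} = A_f X_p + t_f$ (which absorbs both the object's motion and the camera), then stacking the image coordinates over $F$ frames gives a $2F \times P$ trajectory matrix that factors through 4 dimensions. So the trajectories of one object lie in a subspace of dimension at most 4. The cameras and points below are random and synthetic.

```python
import numpy as np

rng = np.random.default_rng(2)
F, P = 15, 40                          # number of frames, feature points per object


def trajectories(F, P, rng):
    """Trajectory matrix (2F x P) of P points of one rigid object under F affine frames."""
    X_h = np.vstack([rng.standard_normal((3, P)), np.ones((1, P))])  # homogeneous 3-D points
    M = np.vstack([rng.standard_normal((2, 4)) for _ in range(F)])   # [A_f | t_f] per frame
    return M @ X_h                     # column p = trajectory of point p over all frames


W1 = trajectories(F, P, rng)           # first object
W2 = trajectories(F, P, rng)           # second, independently moving object
W = np.hstack([W1, W2])                # trajectories of all feature points

print(np.linalg.matrix_rank(W1))       # 4: one object spans a 4-D subspace
print(np.linalg.matrix_rank(W))        # 8: two objects give a union of two such subspaces
```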

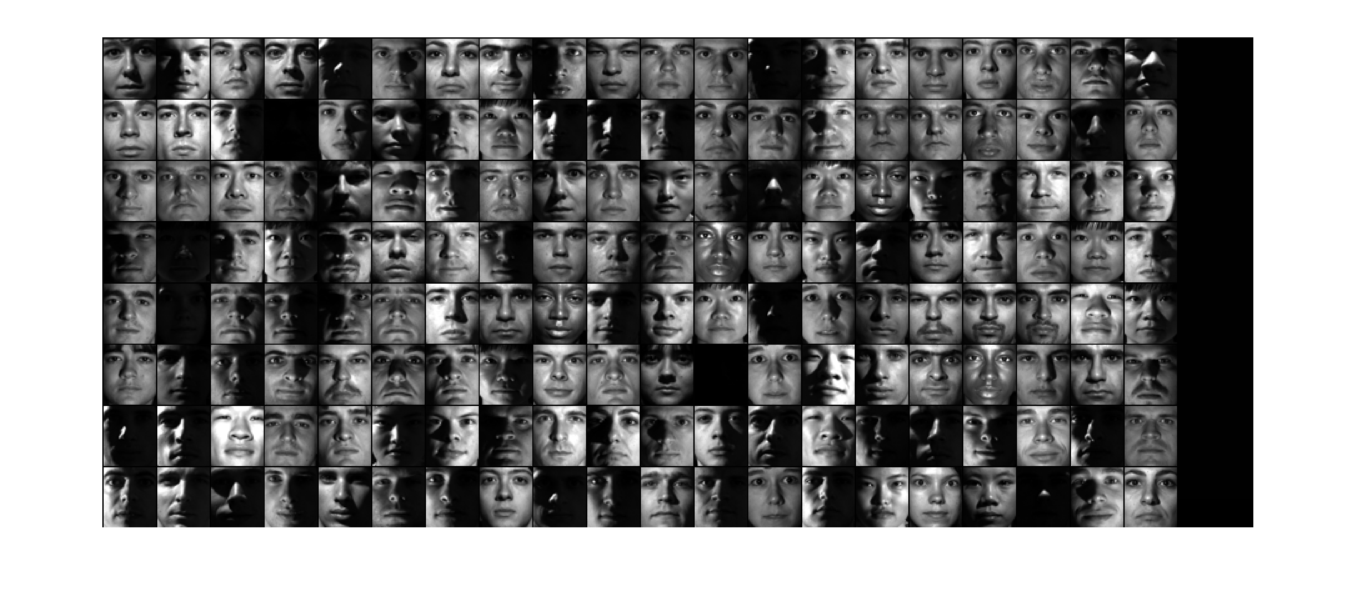

In a face clustering problem, we have a collection of unlabeled images of different faces taken under varying illumination conditions. Our goal is to cluster the images so that the images of each face fall into one group. For a Lambertian object, it has been shown that the set of images taken under different lighting conditions forms a cone in the image space. This cone can be well approximated by a low-dimensional subspace [5, 49]. Thus the images of each person's face approximately lie in one low dimensional subspace, and the face clustering problem reduces to clustering the collection of images into multiple subspaces.

Fig. 23.1 A sample of faces from the Extended Yale Face Database B [40]. It contains 16128 images of 28 human subjects under 9 poses and 64 illumination conditions.

As the examples above suggest, a typical hybrid model for a mixed data set consists of multiple primitive models, where each primitive is a (low dimensional) subspace. The data set is modeled as being sampled from a collection or arrangement (see footnote 2) of subspaces.

An example of a statistical hybrid model is a Gaussian Mixture Model (GMM), where one assumes that the sample points are drawn independently from a mixture of Gaussian distributions. A standard way of estimating such a mixture model is the expectation maximization (EM) method.
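A minimal sketch of EM for a one-dimensional mixture of $K$ Gaussians, to show the alternation between the E-step (soft segmentation of the data) and the M-step (model estimation). The function name, the initialization (means spread between the smallest and largest sample) and the fixed iteration count are our own choices, not something prescribed in the text.

```python
import numpy as np


def em_gmm_1d(x, K, n_iter=200):
    """Estimate weights, means and variances of a K-component 1-D GMM by EM."""
    mu = np.linspace(x.min(), x.max(), K)      # initial means spread over the data
    var = np.full(K, x.var())                  # initial variances
    w = np.full(K, 1.0 / K)                    # initial mixing weights
    for _ in range(n_iter):
        # E-step: responsibility r[i, k] = posterior probability that x_i came from k.
        dens = w * np.exp(-(x[:, None] - mu) ** 2 / (2 * var)) / np.sqrt(2 * np.pi * var)
        r = dens / dens.sum(axis=1, keepdims=True)
        # M-step: maximum likelihood updates weighted by the responsibilities.
        Nk = r.sum(axis=0)
        w = Nk / len(x)
        mu = (r * x[:, None]).sum(axis=0) / Nk
        var = (r * (x[:, None] - mu) ** 2).sum(axis=0) / Nk
    return w, mu, var


rng = np.random.default_rng(3)
x = np.concatenate([rng.normal(-3.0, 1.0, 300), rng.normal(4.0, 0.5, 200)])
w, mu, var = em_gmm_1d(x, K=2)
print(w, mu, var)   # roughly [0.6, 0.4], [-3, 4], [1, 0.25]
```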

The fundamental difficulty in the estimation of hybrid models is the “chicken-and-egg” relationship between data segmentation and model estimation. If the data segmentation were known, one could easily fit a primitive model to each subset. Conversely, if the constituent primitive models were known, one could easily segment the data by choosing the best model for each data point. An iterative approach starts with an initial (hopefully good) guess of the primitive models or the data segments. It then alternates between estimating the model for each segment and segmenting the data based on the current primitive models until the solution converges. In contrast, a global algorithm performs the segmentation and the primitive modeling simultaneously. In the sequel, we will look at a variety of algorithms for solving the subspace clustering problem.
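The iterative approach can be sketched for linear subspaces of a common, known dimension $d$; this particular alternation is often called K-subspaces, and the text does not commit to it. The sketch assumes NumPy, one point per column of `Y`, and an initial segmentation `labels` in which every segment has at least `d` points. Model estimation fits each segment with the SVD, and segmentation assigns each point to the subspace with the smallest residual. Like any such local method, it can converge to a poor solution from a bad initial guess.

```python
import numpy as np


def k_subspaces(Y, labels, K, d, n_iter=100):
    """Alternate between fitting K d-dim linear subspaces and re-segmenting the columns of Y.

    `labels` is the initial segmentation; each initial segment needs at least d points.
    """
    labels = np.asarray(labels).copy()
    bases = [None] * K
    for _ in range(n_iter):
        # Model estimation: leading d left singular vectors of each segment.
        for k in range(K):
            Yk = Y[:, labels == k]
            if Yk.shape[1] >= d:              # otherwise keep the previous basis
                U, _, _ = np.linalg.svd(Yk, full_matrices=False)
                bases[k] = U[:, :d]
        # Segmentation: assign every point to the subspace with the smallest residual.
        res = np.stack([np.linalg.norm(Y - B @ (B.T @ Y), axis=0) for B in bases])
        new_labels = res.argmin(axis=0)
        if np.array_equal(new_labels, labels):
            break                             # segmentation no longer changes
        labels = new_labels
    return labels, bases


# Two random 2-D subspaces of R^30 with 100 points each, and a guess with 10% of labels wrong.
rng = np.random.default_rng(4)
Y = np.hstack([np.linalg.qr(rng.standard_normal((30, 2)))[0] @ rng.standard_normal((2, 100))
               for _ in range(2)])
truth = np.repeat([0, 1], 100)
guess = truth.copy()
flip = rng.choice(200, size=20, replace=False)
guess[flip] = 1 - guess[flip]

labels, bases = k_subspaces(Y, guess, K=2, d=2)
print((labels == truth).mean())   # fraction of points with the correct label
```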

23.1. Notation

First some general notation for vectors and matrices.

For a vector

Let

Clearly,

Clearly,

We note that while

We use

We use

We use

23.1.1. Problem Formulation

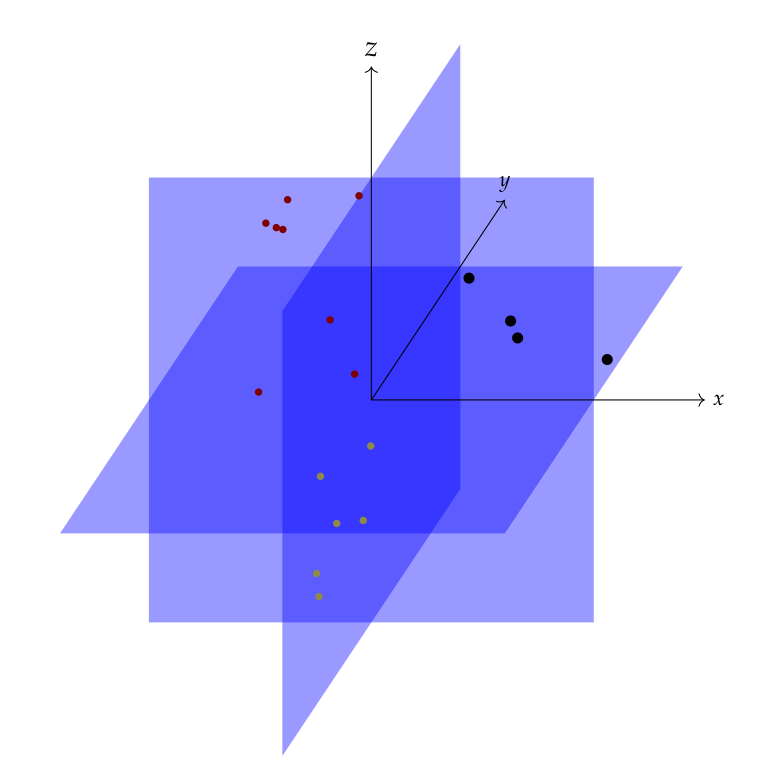

The data set can be modeled as a set of data points lying in a union of low dimensional linear or affine subspaces in a Euclidean space.

Let the data set be

We put the data points together in a data matrix as

The data matrix

Note that in statistics books, data samples are usually placed in the rows of the data matrix. Here, we place each data sample in a column of the data matrix.

We will slightly abuse the notation and let

We will use the terms data points and vectors interchangeably.

Let the vectors be drawn from a set of

The number of subspaces may not be known in advance.

The subspaces are indexed by a variable

The

Let the (linear or affine) dimension of

Here

We may or may not know

We assume that none of the subspaces is contained in another.

A pair of subspaces may not intersect at all (e.g., parallel lines or planes), may have a trivial intersection (e.g., two distinct lines through the origin, which meet only at the origin), or may have a non-trivial intersection (e.g., two planes intersecting in a line).

The collection of subspaces may also be independent or disjoint (see Linear Algebra).

The vectors in

Thus, we can write

where

This segmentation is straightforward if the (affine) subspaces do not intersect, or if they intersect trivially at a single point (e.g., any pair of linear subspaces intersects at the origin).

Let there be

We may not have any prior information about the number of points in individual subspaces.

We do typically require that there are enough vectors drawn from each subspace so that they can span the corresponding subspace.

This requirement may vary for individual subspace clustering algorithms.

For example, for linear subspaces, sparse representation based algorithms require that whenever a vector is removed from

This guarantees that every vector in

The minimum required

Let

Then, the subspaces can be described as

For linear subspaces,

We will abuse

The basic objective of subspace clustering algorithms is to obtain a clustering or segmentation of vectors in

This involves finding out the number of subspaces/clusters

Alternatively, if we can identify

Since the clusters fall into different subspaces, as part of subspace clustering, we may also identify the dimensions

These quantities emerge due to modeling the clustering problem as a subspace clustering problem.

However, they are not essential outputs of the subspace clustering algorithms.

Some subspace clustering algorithms may not calculate them, yet they are useful in the analysis of the algorithm.

See Data Clustering for a quick review of data clustering terminology.

23.1.2. Noisy Case

We also consider clustering of data points which are contaminated with noise.

The data points do not perfectly lie in a subspace but can be approximated as a sum of a component which lies perfectly in a subspace and a noise component.

Let

be the

The clustering problem remains the same. Our goal would be to characterize the behavior of the clustering algorithm in the presence of noise at different levels.
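For experimenting with the algorithms in this chapter, a hypothetical helper like the one below draws points from a union of random linear subspaces and adds Gaussian noise, following the model above: each column is a point lying exactly in one subspace plus a noise component. The function name, the random orthonormal bases, the standard normal coefficients and the isotropic noise of standard deviation `sigma` are all our own assumptions.

```python
import numpy as np


def union_of_subspaces(M, dims, counts, sigma=0.0, seed=0):
    """Sample points from K random linear subspaces of R^M, plus optional noise.

    dims[k] is the dimension of subspace k and counts[k] its number of points.
    Returns the noisy M x S data matrix (one point per column), the noiseless
    part, the true labels, and an orthonormal basis for each subspace.
    """
    rng = np.random.default_rng(seed)
    bases = [np.linalg.qr(rng.standard_normal((M, D)))[0] for D in dims]
    clean = np.hstack([B @ rng.standard_normal((B.shape[1], N))
                       for B, N in zip(bases, counts)])     # points exactly in subspaces
    labels = np.repeat(np.arange(len(dims)), counts)
    noise = sigma * rng.standard_normal(clean.shape)        # isotropic Gaussian noise
    return clean + noise, clean, labels, bases


Y, Y_clean, labels, bases = union_of_subspaces(M=20, dims=[2, 3, 4], counts=[30, 40, 50], sigma=0.05)
print(Y.shape, np.bincount(labels))   # (20, 120) [30 40 50]
```

Varying `sigma` gives a simple way to study how a clustering algorithm degrades at different noise levels.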

23.2. Linear Algebra

This section reviews some concepts from linear algebra that are relevant to this chapter.

A collection of linear subspaces

A collection of linear subspaces is called disjoint if the subspaces are pairwise independent [33]. In other words, every pair of subspaces intersects only at the origin.
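Both notions can be tested numerically from spanning matrices. The sketch assumes the standard definition of independence, namely that the dimension of the sum $S_1 + \dots + S_K$ equals the sum of the individual dimensions, and uses NumPy's `matrix_rank` to compute dimensions; the helper names are ours. The example shows that disjointness is weaker: three distinct lines through the origin in $\mathbb{R}^2$ are pairwise independent but not independent.

```python
import numpy as np
from itertools import combinations


def dim(B):
    """Dimension of the subspace spanned by the columns of B."""
    return np.linalg.matrix_rank(B)


def are_independent(spans):
    """True if dim(S_1 + ... + S_K) == dim(S_1) + ... + dim(S_K)."""
    return dim(np.hstack(spans)) == sum(dim(B) for B in spans)


def are_disjoint(spans):
    """True if the subspaces are pairwise independent (pairwise meet only at 0)."""
    return all(are_independent([A, B]) for A, B in combinations(spans, 2))


axes = [np.eye(3)[:, [i]] for i in range(3)]             # coordinate axes of R^3
lines = [np.array([[1.0], [0.0]]), np.array([[0.0], [1.0]]), np.array([[1.0], [1.0]])]

print(are_independent(axes), are_disjoint(axes))         # True True
print(are_independent(lines), are_disjoint(lines))       # False True
```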

23.2.1. Affine Subspaces

For a detailed introduction to affine concepts, see [54].

For a vector

The image of any set

A translation of space is a one to one isometry of

A translate of a

Flats of dimension 1, 2, and $n-1$ in $\mathbb{R}^n$ are called lines, planes, and hyperplanes, respectively.

Flats are also known as affine subspaces.

Every

Flats are closed sets.

Affine combinations

An affine combination of the vectors

Thus,

The set of affine combinations of a set of vectors

A finite set of vectors

Otherwise, the set is affinely dependent.

A finite set of two or more vectors is affinely independent if and only if none of them is an affine combination of the others.
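A convenient computational test, which follows from these definitions: points $x_0, \dots, x_m$ are affinely independent exactly when the differences $x_1 - x_0, \dots, x_m - x_0$ are linearly independent. A minimal NumPy sketch (the helper name is ours):

```python
import numpy as np


def affinely_independent(points):
    """points: sequence of vectors in R^n (one per row).

    They are affinely independent iff the differences x_i - x_0, i >= 1,
    are linearly independent, i.e. the difference matrix has full column rank.
    """
    P = np.asarray(points, dtype=float)
    if len(P) <= 1:
        return True                       # a single point is affinely independent
    D = (P[1:] - P[0]).T                  # n x (len(P) - 1) matrix of difference vectors
    return bool(np.linalg.matrix_rank(D) == len(P) - 1)


print(affinely_independent([[0, 0], [1, 0], [0, 1]]))            # True: a triangle
print(affinely_independent([[0, 0], [1, 1], [2, 2]]))            # False: collinear
print(affinely_independent([[0, 0], [1, 0], [0, 1], [1, 1]]))    # False: 4 points in R^2
```

The last case illustrates that at most $n + 1$ points of $\mathbb{R}^n$ can be affinely independent.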

Vectors vs. Points

We often use capital letters to denote points and bold small letters to denote vectors.

The origin is referred to by the letter

An n-tuple

In basic linear algebra, the terms vector and point are used interchangeably.

While discussing geometrical concepts (affine or convex sets etc.), it is useful to distinguish between vectors and points.

When the terms “dependent” and “independent” are applied to points without qualification, they refer to affine dependence/independence.

When used for vectors, they mean linear dependence/independence.

The span of

Every

Each set of

Each point of a

The coefficients of the affine combination of a point are the affine coordinates of the point in the given affine basis of the

A

This can be easily obtained by choosing an affine basis for the flat and constructing its linear span.

Affine functions

A function

If

A property which is invariant under an affine mapping is called affine invariant.

The image of a flat under an affine function is a flat.

Every affine function differs from a linear function by a translation.
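Writing an affine function as $f(x) = Ax + b$ (a linear map followed by a translation, as stated above), one can check numerically that it preserves affine combinations: $f(\sum_i t_i x_i) = \sum_i t_i f(x_i)$ whenever $\sum_i t_i = 1$, because the translation $b$ is then counted exactly once. The specific $A$, $b$ and points below are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(5)
A = rng.standard_normal((2, 3))            # linear part
b = np.array([1.0, -2.0])                  # translation


def f(x):
    """An affine function from R^3 to R^2."""
    return A @ x + b


X = rng.standard_normal((4, 3))            # four points in R^3, one per row
t = np.array([0.5, -1.0, 2.0, -0.5])       # coefficients summing to 1
images = np.array([f(x) for x in X])

print(np.allclose(f(t @ X), t @ images))           # True: affine combination preserved
print(np.allclose(f(2 * t @ X), 2 * t @ images))   # False: coefficients sum to 2
```

In the second case the two sides differ by exactly $b$, which is why linear combinations in general are not preserved.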

Affine functionals

A functional is an affine functional if and only if there exists a unique vector

Affine functionals are continuous.

If

23.2.2. Hyperplanes and Halfspaces

Corresponding to a hyperplane

The vector

All non-null vectors

The directions of

Conversely, the graph of

We can find a unit norm normal vector for

Each point

Distance of the point

The coordinate

Hyperplanes

This occurs if and only if they have a common normal direction.

They are different constant sets of the same linear functional.

If

Half-spaces

If

The graphs of

Corresponding to a hyperplane

Open half spaces are open sets and closed half spaces are closed sets.

If
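A short sketch of the hyperplane and half-space formulas, assuming the hyperplane is written as $H = \{x : a^T x = b\}$ with $a \neq 0$ (our symbols). The signed distance of $x$ from $H$ is $(a^T x - b)/\|a\|_2$, its sign tells which open half-space contains $x$, and moving $x$ back along the unit normal by that distance gives the closest point of $H$.

```python
import numpy as np


def signed_distance(x, a, b):
    """Signed distance of x from H = {y : a^T y = b}; positive on the side a points to."""
    return (a @ x - b) / np.linalg.norm(a)


def half_space(x, a, b):
    """+1 if a^T x > b, -1 if a^T x < b, 0 if x lies on H."""
    return int(np.sign(a @ x - b))


def project(x, a, b):
    """Closest point of H to x (orthogonal projection onto the hyperplane)."""
    return x - signed_distance(x, a, b) * a / np.linalg.norm(a)


a, b = np.array([3.0, 4.0]), 5.0          # the line 3x + 4y = 5 in R^2
for x in (np.zeros(2), np.array([3.0, 4.0])):
    print(signed_distance(x, a, b), half_space(x, a, b), project(x, a, b))
```

Both sample points lie on the same normal line, so they project to the same point of $H$ while lying in opposite half-spaces.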

23.2.3. General Position

A general position for a set of points or other geometric objects is a notion of genericity.

It refers to the generic situation, as opposed to more special or coincidental cases.

For example, generically, two lines in a plane intersect in a single point.

The special cases are when the two lines are either parallel or coincident.

Three points in a plane in general are not collinear.

If they are, then it is a degenerate case.

A set of

In general, a set of
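For the planar example above, a set of points is in general position when no three of them are collinear. A brute-force sketch (ours) checks every triple with the 2-D cross product, which vanishes exactly when the three points are collinear; the tolerance `tol` is an arbitrary choice for floating point input.

```python
import numpy as np
from itertools import combinations


def in_general_position_2d(points, tol=1e-12):
    """True if no three of the given points in the plane are collinear."""
    P = np.asarray(points, dtype=float)
    for i, j, k in combinations(range(len(P)), 3):
        u, v = P[j] - P[i], P[k] - P[i]
        if abs(u[0] * v[1] - u[1] * v[0]) <= tol:   # zero cross product: collinear
            return False
    return True


square = [[0, 0], [1, 0], [1, 1], [0, 1]]
print(in_general_position_2d(square))                 # True
print(in_general_position_2d(square + [[0.5, 0.0]]))  # False: (0,0), (1,0), (0.5,0) collinear
```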

23.3. Matrix Factorizations

23.3.1. Singular Value Decomposition

A non-negative real value

The vectors

For every

The decomposition of

The first

The rank of

The eigenvalues of positive semi-definite matrices

Specifically,

We can rewrite

The spectral radius and

The Moore-Penrose pseudo-inverse of

Further,

The non-zero singular values of

Geometrically, singular values of

(i.e. image of the unit sphere under

Thus, if
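Several SVD facts from this section can be checked numerically with NumPy's `np.linalg.svd`, which returns $U$, the singular values in descending order, and $V^T$. The sketch builds a rank-2 matrix, reads off its rank as the number of non-zero singular values (using a relative threshold of our choosing), compares the squared singular values with the eigenvalues of $A^T A$, forms the pseudo-inverse by inverting only the non-zero singular values, and checks that $A$ stretches each right singular vector $v_i$ by $\sigma_i$.

```python
import numpy as np

rng = np.random.default_rng(6)
A = rng.standard_normal((5, 2)) @ rng.standard_normal((2, 4))   # 5 x 4 matrix of rank 2

U, s, Vt = np.linalg.svd(A, full_matrices=False)   # A = U diag(s) Vt, s descending
tol = 1e-10 * s[0]                                  # threshold for "numerically zero"
rank = int((s > tol).sum())                         # rank = number of non-zero singular values

# Squared singular values are the eigenvalues of the PSD matrix A^T A.
eig = np.sort(np.linalg.eigvalsh(A.T @ A))[::-1]

# Pseudo-inverse: invert the non-zero singular values only.
s_inv = np.zeros_like(s)
s_inv[s > tol] = 1.0 / s[s > tol]
A_pinv = Vt.T @ np.diag(s_inv) @ U.T

# Geometry: ||A v_i|| = sigma_i for each right singular vector v_i.
stretch = np.linalg.norm(A @ Vt.T, axis=0)

print(rank)                                          # 2
print(np.allclose(eig, s ** 2))                      # True
print(np.allclose(A @ A_pinv @ A, A))                # True (a Penrose condition)
print(np.allclose(stretch, s))                       # True
```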

23.4. Principal Angles

If

In other words, we try to find unit norm vectors in the two spaces which are maximally aligned with each other.

The angle between them is the smallest principal angle.

Note that

If we have

We then compute the inner product matrix

The SVD of

In particular, the smallest principal angle is given by
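A sketch of this computation in NumPy, assuming each subspace is given by a matrix with linearly independent columns (so that a QR factorization yields an orthonormal basis): the singular values of the inner product matrix are the cosines of the principal angles, and the largest one gives the smallest angle. The helper name is ours. Since `arccos` loses accuracy for very small angles, near-zero angles should be compared with a loose tolerance.

```python
import numpy as np


def principal_angles(A, B):
    """Principal angles (radians, ascending) between span(A) and span(B).

    A (n x p) and B (n x q) must have linearly independent columns.
    """
    Qa, _ = np.linalg.qr(A)                           # orthonormal basis of span(A)
    Qb, _ = np.linalg.qr(B)                           # orthonormal basis of span(B)
    cosines = np.linalg.svd(Qa.T @ Qb, compute_uv=False)   # descending cosines
    return np.arccos(np.clip(cosines, -1.0, 1.0))     # clip guards against rounding


# The xy-plane and the plane spanned by e1 and (0, 1, 1) share the x-axis.
P1 = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]])
P2 = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]])
angles = principal_angles(P1, P2)
print(np.degrees(angles))    # approximately [0, 45]
```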

Footnote 1: PCA can also be viewed as a statistical model. When the data points are independent samples drawn from a Gaussian distribution, the geometric formulation of PCA coincides with its statistical formulation.

Footnote 2: We use the terms arrangement and union interchangeably. For more discussion, see Algebraic Geometry.